Posted on January 28, 2021

Risk-based Vulnerability Management for SMEs and Startups

I recently was asked a related question and it set me off thinking about how smaller companies could approach such a thing. So, in order to not waste the thought cycles, why not gather them into this blog post?

For SMEs, or for organisations not large enough to have a dedicated security team, vulnerability management often involves an annual or quarterly internal/external vulnerability assessment. This is generally a 2- or 3-day engagement that aims to capture a current snapshot of your network assets and associated vulnerabilities through the lens of an automated vulnerability scanning tool. Often the enterprise security scanning software used to deliver these services strictly adheres to CVSS or some OWASP-based risk rating taxonomy when grading your risks.

In my view this task can be performed by anybody with the ability to enter an IP Network range into a form and press the start button. Ideally you should get someone who knows what they are doing to set this up for you or to help interpret the results. There is only so much value an automated in-house process such as this can provide. Using Nessus or Qualys to do regular and continuous scanning provides useful insights and vulnerability information but it is by no means an all-encompassing solution. I’m happy to assist clients with setting this up in a way they can self-manage and leverage such a tool moving forward and provide documentation on how to best use these tools.

The meat of this post however is what you should be doing afterwards. You have your vulnerability scan results and everything is red or orange or green, now what do you do? You can’t fix everything; do you focus on the issues highlighted as critical? Do you try to deploy patches for everything? Do you panic? The answer of course, is that you hire a friendly penetration tester or knowledgeable security consultant with a deep insight into how these vulnerabilities are leveraged in the real world, to help you break down your assets and remediation plan into actionable chunks. You need to also do further testing to be aware of the critical issues these automated tools will not detect. There will be many.

Practical expertise in reproducing or finding vulnerabilities and determining the REAL-WORLD risk and impact of identified security issues is invaluable. Often attackers will go much further with identified vulnerabilities than can be determined merely by matching issues to a CVSS rating. You need to give penetration testers the right amount of time to correctly evaluate your exposure and risks. They need to have a full view of where the networks or applications they are testing fit within your overall business, what controls are in place to protect them and where potential issues can arise. This allows a much greater understanding to be formed of where your security risks are and allows more context-aware recommendations to be provided to protect your assets.

Making secure design decisions early will save you an incredible amount of grief or effort when your applications become more robust and you are trying to bootstrap in patches or trying to counter active threats. A “3-day Blackbox security review” in your early days probably won’t offset business security risks and you are setting yourself up to fail in future. Get security expertise involved early, train some staff in secure development or coding, or outsource secure code reviews. You need to take this seriously as the modern internet punishes those who are complacent.

The best way to leverage a consultant’s time is to give them the documents or information they need to best understand your operation. Don’t stick firewalls or services in their way to stop them properly testing your applications. Give them source code. Product insights can help them identify the areas you need to focus on and how you should go about increasing your overall security. The correct management of uncertainties, combined with a better understanding of the likely paths of exploitation, can greatly direct your efforts.

Risk-based vulnerability management differs from the results of a security scanning tool in that you can utilise intelligence-driven remediation and decisions, leveraging threat intelligence services or greater expertise in the threat landscape or security field to guide where to best spend your time.

With a risk-based approach you ultimately should be focused on securing the areas that are most likely to be attacked by a real-world criminal. Knowledge of real-world attacks and an understanding of the real motivations (financial or information gathering in nature) can better guide your remediation efforts. There are a lot of different concerns that should factor into making a real-world risk calculation and the guidance of security expertise can ensure you prioritise issues that present the most immediate critical risks.

Information security is a rapidly changing and modern field. We don’t have a full view of all of the threats at any one time, nor do we have a large, comprehensive historical data-set to model the risks reliably. We are nowhere near having a full enough picture to accurately calculate risk to the same extent that can be achieved in other fields by actuarial scientists. The people closest to decision making and implementing change in an organisation (i.e. IT staff and business managers) need to factor in a correct evaluation of risk. Security expertise and threat intelligence can enhance these actions and solutions in a way that drives down risk holistically.

The following are some areas where security risk considerations can enhance your vulnerability management and remediation prioritisation:

- The individual risk rating of each asset and associated threat models.

- Where each asset sits in an overall business context and the criticality of the business function it serves.

- The most up-to-date and continuous visibility of your current assets and their current vulnerabilities.

- Visibility of developing and active threats and the threat groups that target your particular industry.

- Visibility of what vulnerabilities are new and actively being exploited by malware or automatically propagated.

- The complexities involved in deploying patches for each asset or network.

- Where each of your assets or applications falls on a risk scale when compared to other applications or services. Widely used apps are more likely to be valuable to more attackers and to be targeted.

- Managing uncertainty, unknowns (0days) and the predictive prioritization of mitigation.
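As a rough illustration of how a few of these considerations could be combined into a ranking, here is a minimal sketch. The fields, the 1 to 5 scales and every multiplier are assumptions made up for the example, not a standard formula; the point is only that a lower CVSS score on a critical, exposed, actively exploited asset can outrank a "critical" on something isolated.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    name: str
    cvss: float              # scanner-reported base score, 0-10
    asset_criticality: int   # 1 (low) to 5 (business critical), your own rating
    internet_facing: bool
    exploited_in_wild: bool  # e.g. taken from a threat intelligence feed
    patch_effort: int        # 1 (trivial) to 5 (painful), your own estimate


def priority(f: Finding) -> float:
    """Illustrative weighting only; tune the multipliers to your business."""
    score = f.cvss * f.asset_criticality
    if f.internet_facing:
        score *= 1.5
    if f.exploited_in_wild:
        score *= 2.0
    # Easier fixes float up slightly so quick wins are not buried.
    return score / (1 + 0.1 * (f.patch_effort - 1))


findings = [
    Finding("Outdated TLS config on intranet wiki", 7.5, 1, False, False, 2),
    Finding("Known-exploited RCE on public VPN gateway", 8.1, 5, True, True, 3),
    Finding("Critical CVE on isolated test server", 9.8, 1, False, False, 1),
]

ranked = sorted(findings, key=priority, reverse=True)
for f in ranked:
    print(f"{priority(f):7.1f}  {f.name}")
```

The VPN gateway ends up first despite not having the highest CVSS score, which is the kind of reordering a scanner on its own will not give you.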

All pros and cons must be considered in each situation and for each asset or vulnerability. For advanced threat actors or attack campaigns, having threat intelligence and insight into tactics, techniques and procedures can lead to better security hardening. Understanding the paths threats usually take once a compromise occurs encourages more thoughtful and effective security controls. You can prioritise and monitor for the right things or have the right network security or firewall granularity in place to introduce extra road blocks. It’s an advantage to know what attackers often do.

Introduce more barriers that attackers need to work around or increase your visibility in areas known to be risky. By being smarter about risk you can often increase the time it takes for attackers to carry out their malicious activity or increase the likelihood of you detecting them before they do. They are more likely to make mistakes or set off alarms. This gives vital extra time for would-be threat hunters, incident responders and network security folks to identify and neutralise the threat before it impacts revenue.

Conclusion

A risk-based approach to vulnerability management is essential in modern times and a threat-aware approach or security-conscious business can be crucial to building your success. Learn from the mistakes others have made. Doing nothing will make you a very likely target and victim. Trying to do too much and patch everything at the same time will waste vital resources that could be better utilised by focusing on the security issues and risks that are more likely to be exploited. Balance and planning are essential. This is why modern and successful companies have started shifting to asset management, continuous security testing and bug bounty services. You need awareness of the risks most likely to be exploited at any time and to develop strategies that will maximize your efforts in an always changing environment. Secure foundations support taller buildings.

Posted on January 4, 2021

Offensive Anomaly Detection

I hope you are all keeping well and managing as best you can, under whatever lockdown circumstances you may find yourself in. In such stressful times, I find it’s very easy to overwork and subsequently burn out as diving into work takes my mind off the greater situation. Take your holidays when you can, watch your working hours and automate where possible to make things easier for yourself. With vaccines on the way, we’ll all hopefully be back to exploring meat-space in a few short months. For now, welcome to 2021: a hacking odyssey!

Something like this post has been fermenting in the back of my mind for quite a while and I was always too busy when I tried to write it, too distracted to clearly formulate thoughts. I’m going to attempt to touch on some of the fundamentals of blackbox web application security testing. How to approach and rationalize such a thing, what to spot when testing and some discussion of tooling, techniques and automation in general. I have a big year planned, I’ve written more code in 2020 than the rest of my life combined and I’ve been building some fun projects and fuzzing tools in rust and python to assist me with my escapades. I’ll also be undertaking some new business ventures in 2021.

Coverage

Back in 2016 when I wrote parameth, it was a simple proof of concept (poc) script to automate an attack that I was tired of doing manually via Burpsuite intruder (which was slow as hell back then). There were no other tools that implemented this same technique at the time, which gave it some novelty and attention. Novelty is also what I’ve pursued with my follow-up research and development: by doing something others aren’t, or doing things differently, you are more likely to explore new areas.

This poc had a great impact and got a shoutout in the Bug bounty Hunters Methodology V2 talk by Jason Haddix. The technique was expanded on further by the community and the idea was eventually fully integrated into Burpsuite via the param miner plugin and also developed further into a tool with Arjun. This was awesome to see as it’s a great technique (even if they weren’t inspired by parameth)! This script solved a simple problem I found myself coming up against often while blackbox testing. Writing it changed how I fundamentally thought about security testing and gave me some baseline code and concepts to work with that could be expanded on further as I continued developing my automation and tooling.

At the most basic level, finding additional input parameters identifies more functionality of a target application. This can also be applied to json/xml object parameters, request headers and cookies… essentially anywhere user or application input can be received and processed. Identifying more inputs means more coverage and a deeper exploration of the application attack surface. When it comes to security testing and fuzzing, coverage is hands-down the most important thing. Thanks to greater speed in my more recent iteration of tools, I now also fuzz the parameter value with common attacks to try to uncover inputs that would otherwise have been missed.
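To make the idea concrete, here is a minimal sketch of parameter discovery by response comparison. It is not the parameth code: `fake_app` is a made-up stand-in for a real HTTP request so the example runs offline, and the marker value and wordlist are arbitrary assumptions.

```python
from typing import Callable, Dict, List, Tuple

Response = Tuple[int, str]  # (status code, body)


def fake_app(params: Dict[str, str]) -> Response:
    """Hypothetical target; swap in a real HTTP client call when testing."""
    if "debug" in params:
        return 200, "<html>home<!-- debug: " + params["debug"] + " --></html>"
    if "redirect" in params:
        return 302, ""
    return 200, "<html>home</html>"


def discover_params(send: Callable[[Dict[str, str]], Response],
                    wordlist: List[str], marker: str = "zq9x") -> List[str]:
    """Return the candidate names that change the response in some way."""
    baseline = send({})
    found = []
    for name in wordlist:
        status, body = send({name: marker})
        # Interesting if the status changes, the size changes,
        # or the marker value is reflected back in the body.
        if (status != baseline[0]
                or len(body) != len(baseline[1])
                or marker in body):
            found.append(name)
    return found


print(discover_params(fake_app, ["id", "debug", "page", "redirect"]))
# → ['debug', 'redirect']
```

Against a real target the baseline is rarely this stable, so you would normally take a few baseline requests first and ignore anything that varies between them.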

Coverage is also why whitebox testing generally yields more and better vulnerabilities than blackbox testing. When you can see all the inputs and what is done to them in the backend, you can spend more time formulating attacks instead of spending it blindly discovering attack surface. It’s also why, as a community, we should be majorly pushing for security testing to occur in the development stages and before security issues have a chance of ending up in production systems.

Since implementing an SDLC is more of a business problem and undertaking, it’s also particular to each business and their individual processes. It isn’t a simple generic technical problem I can solve, hack on or try to write code for. Thus, I’ve been focusing my research efforts on the more fun problem of how to catch vulnerabilities on production systems. This is why I will talk about application responses and the identification of anomalies within them. This very often leads to the discovery of unintentional useful behavior or directly to security vulnerabilities.

As with exploring and mapping an organisation’s network, you need to map out as much of an application and its functionality as possible. This can be achieved through crawling the application endpoints and its client-side code (JavaScript), browsing through the app, brute forcing directories or files, or reading and discovering API documentation. Ideally you want to be collecting all the documents, names, formats, emails, scripts, endpoints and parameters as you go, as they can be used later to identify additional context and assist greatly when you start fuzzing.

Detection and Automation

For web applications, the vast majority of vulnerabilities can be detected by identifying different HTTP status codes or response sizes returned from a request. Other indicators can be the response times, new response headers or cookies being returned, or any noticeable corruption or change of the response content. All of these things are detectable in an automated way and in essence are how DAST tools like Burpsuite work, albeit for specific vulnerabilities. This testing formula distilled is:

- Take a normal request

- Mutate it in some way

- Examine how it changes the response

- Compare it to previous responses

- Examine where else in the application these inputs appear

This response could also be examined for signatures, particular keywords and error messages that indicate a known vulnerability type or fingerprint the underlying application libraries or functions in use. In my tools, I try and simply detect any difference in the response that might indicate there is something worth investigating.
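The formula above can be sketched in a few lines. This is an illustration rather than my actual tooling: the request is modelled as a plain dict of parameters, and `fake_send` is a hypothetical stand-in for the HTTP layer so the example runs without a target.

```python
from typing import Callable, Dict, Iterator, List, NamedTuple, Tuple


class Response(NamedTuple):
    status: int
    headers: Dict[str, str]
    body: str


def mutations(request: Dict[str, str], payloads: List[str]) -> Iterator[Dict[str, str]]:
    """Yield copies of the request with one parameter replaced at a time."""
    for key in request:
        for payload in payloads:
            mutated = dict(request)
            mutated[key] = payload
            yield mutated


def differences(base: Response, new: Response) -> List[str]:
    """Describe how a response deviates from the baseline response."""
    diffs = []
    if new.status != base.status:
        diffs.append(f"status {base.status} -> {new.status}")
    if len(new.body) != len(base.body):
        diffs.append(f"size {len(base.body)} -> {len(new.body)}")
    for name in sorted(set(new.headers) - set(base.headers)):
        diffs.append(f"new header {name}")
    return diffs


def fuzz(send: Callable[[Dict[str, str]], Response], request: Dict[str, str],
         payloads: List[str]) -> Iterator[Tuple[Dict[str, str], List[str]]]:
    """Take a normal request, mutate it, and keep only responses that differ."""
    base = send(request)
    for mutated in mutations(request, payloads):
        diffs = differences(base, send(mutated))
        if diffs:
            yield mutated, diffs


def fake_send(req: Dict[str, str]) -> Response:
    """Hypothetical application behaviour, for demonstration only."""
    plain = {"Content-Type": "text/html"}
    if "'" in req["id"]:
        return Response(500, plain, "SQL syntax error")
    if req["lang"] == "xx":
        return Response(200, {**plain, "Set-Cookie": "lang=xx"}, "<p>hello</p>")
    return Response(200, plain, "<p>hello</p>")


for mutated, diffs in fuzz(fake_send, {"id": "1", "lang": "en"}, ["1'", "xx"]):
    print(mutated, diffs)
```

Only two of the four mutated requests are reported: the quote in `id` that triggers an error, and the `lang` value that causes a new cookie to be set. Everything else is chaff.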

The web application security community loves emphasizing how manual testing is superior to all automated testing, but what they really mean by this is that security testing requires human thought to interpret what is happening: understanding the context around the payloads used and the requests and responses being returned, all within the greater context of the application functionality and what the application is trying to achieve. Sending HTTP requests and retrieving responses is an automated process performed by a program, regardless of whether there is someone reading and interpreting the results or not.

I spent a lot of time working on how to best mutate requests in a context-aware way and then storing and highlighting the ones that caused interesting or unexpected responses. The idea is to fuzz like a demon from hell and then filter out the chaff and keep the interesting stuff or anomalies for a human eye to further examine. It’s partially automated manual testing, not a scan for known specific things, which is how a large majority of tools work. I essentially built a more automated ffuf that stores full requests and response bodies so I can also use it for other things like detecting changes in an application over time, detecting new headers/cookies and filtering or fingerprinting things more efficiently.
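One simple way to "filter out the chaff" is to fingerprint every response and keep only the rare fingerprints. The sketch below assumes results have already been collected; the bucket size and the 5% rarity threshold are arbitrary values chosen for the example, not what my tools use.

```python
from collections import Counter
from typing import List, NamedTuple, Tuple


class Result(NamedTuple):
    payload: str
    status: int
    size: int


def fingerprint(r: Result, bucket: int = 50) -> Tuple[int, int]:
    """Group responses by status and rough size so small jitter is ignored."""
    return r.status, r.size // bucket


def anomalies(results: List[Result], max_share: float = 0.05) -> List[Result]:
    """Keep results whose fingerprint is rare across the whole run."""
    counts = Counter(fingerprint(r) for r in results)
    limit = max(1, int(len(results) * max_share))
    return [r for r in results if counts[fingerprint(r)] <= limit]


# 200 boring 404 pages with slightly varying sizes, plus two oddities.
results = [Result(f"word{i}", 404, 1200 + i % 7) for i in range(200)]
results.append(Result("admin", 403, 310))
results.append(Result("backup.zip", 200, 48211))

for r in anomalies(results):
    print(r)
```

Fixed-width buckets are crude (two near-identical sizes can land either side of a bucket boundary), so treat this as a starting point for the filtering rather than a finished heuristic.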

Some of the most interesting vulnerabilities can be found by sheer brute force fuzzing. I try to replicate this by scanning apps with known vulnerabilities and seeing where my own tools can detect them or what can allow the vulnerability to be identified. In blackbox testing it is widely true that the people who are more persistent and try more things, more quickly, are the ones who find more vulnerabilities faster. Even when doing the same thing that has been done before, there is a new emphasis on optimization for speed. This allows time to be spent manually examining the things that matter.

Until skynet is sentient, no automated tool can have human context awareness. Think of testing tools as an extension of your capabilities. They do data collection and repetitive tasks quicker and better than you can and should be used for that. You still need to interpret the output in an intelligent way. Burpsuite repeater is going to be a useful tool for as long as websites communicate over HTTP and is reliable for manually digging in when you have something interesting to poke at.

The field of information security is primarily concerned with securing information. When you are testing applications, it’s worth heavily considering what information the company may want to keep secret and secure, both in the context of the greater business and in the context of the data an application relies on to function. Often developers build functionality that isn’t aware of this or the data security context.

Developers tend to have a consistent perspective of treating data or information as something that needs to be processed, as opposed to something that needs to be correctly hidden from prying eyes. So pry like fuck. Where and how is the data stored? Where and how is this sensitive/useful data accessed?

Think About Things!

Released in simpler times before the global pandemic, when things like massive climate change and impending world war were all we had to worry about. I want to leverage what was obviously the most catchy and funky song of 2020. I want you to think about things.

I want you to think of an application like a baby you are trying to communicate with. You love your baby and you can’t wait to find out what the baby thinks about things (i.e. your requests). You need to find the right thing or quick hack to make it speak your language, feed it the right inputs and simply listen and wait lovingly for any interesting output. Like a real baby, applications generally first respond with cries, shits and tears.

You should not run out of new things to try. It shouldn’t be weird to hit a million requests on a single endpoint when you are fuzzing. Have you run every payload list and permutation of all chars or payloads you can find? Against every location in a request? Every parameter, every directory, every header, every cookie, every index of every part of the data you are sending? Can you find new cookies, headers, parameters to target? DO YOU EVEN WANT YOUR BABY TO COMMUNICATE WITH YOU?

Try harder etc. You can’t find what you don’t look for. What type of input is expected? Do different types produce useful errors? How does it process the following data types?

- An integer

- A String

- XML / JSON / Serialized Object

- Binary / Hex / Url Encoding / Ascii / Non-Ascii / Unicode / Encrypted data

- Really large or really small instances of the above data

- Any mass assignment or type confusion possible (String -> array?)

- Null bytes, special characters or symbols, spaces, no data, carriage returns, newlines?

- Can you overload the parameter by including it multiple times?

- Are there other similarly named parameters? Can you brute force them?

- Can you inject into the parameter name itself?
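A few of the questions above translate directly into generated test values. The sketch below builds one query string per probe for a single parameter; the probe values themselves are just examples I picked for illustration, and a real list would be far longer.

```python
from typing import Dict
from urllib.parse import urlencode


def type_probes(original: str) -> Dict[str, str]:
    """Map a label to a replacement value for one parameter."""
    return {
        "integer": "1",
        "negative": "-1",
        "huge_integer": str(2 ** 64),
        "string": "test",
        "empty": "",
        "long_string": "A" * 10000,
        "json_object": '{"a": 1}',
        "xml": "<a>1</a>",
        "null_byte": original + "\x00",
        "newline": original + "\r\n",
        "unicode": original + "\u00e9\u202e",
        "hex": original.encode().hex(),
    }


def query_variants(name: str, original: str) -> Dict[str, str]:
    """Build an encoded query string for every probe of a single parameter."""
    variants = {label: urlencode([(name, value)])
                for label, value in type_probes(original).items()}
    # Type confusion: turn the parameter into an array.
    variants["array"] = urlencode([(name + "[]", original)])
    # Overload the parameter by including it multiple times.
    variants["duplicate"] = urlencode([(name, original), (name, "other")])
    # Inject into the parameter name itself.
    variants["name_injection"] = urlencode([(name + "'", original)])
    return variants


for label, query in query_variants("id", "7").items():
    print(f"{label:15} {query[:60]}")
```

Note that `urlencode` percent-encodes the values for you, which is what you want for a baseline run. It is often worth repeating the run with raw, unencoded values too, since encoding handling is itself something to probe.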

Additionally, how might the input be used and abused? Think about how the backend of the application may process the data you are sending.

- Is it later used as part of a backend request? (SSRF)

- Can you load local resources or traverse file directories? (LFI/ RFI/ Directory Traversal)

- Is it used in a template? (SSTI)

- Is it vulnerable to XSS or SQL or Command Injection or other injection types? (Code/Ldap/Xpath)

- If XML or JSON is it vulnerable to object injection? (XXE / Deserialization)

- What happens if you increment or decrement the integer referenced?

- What happens if you use a different user’s ID or signatures or tokens?

- Can you make requests or view responses while unauthenticated?

- Can you view the response for the same request while logged in as a different user or under another session?

- Is DNS being resolved, what about other protocols in the parameter?

- Is data mutated in the response?

- Is the info stored? Where is it reproduced in the application and how?

In April 2015, in a post on the Bugcrowd forum, I gave some ideas of how a beginner could approach fuzzing a parameter. I’ve seen this exact info copied and pasted to multiple blog posts elsewhere. So I’ve repeated and built on it here in my own blog! When targeting an application, the above can also apply to any part of a request. What happens if you test for the same things in headers, cookies or the request body or URL path itself? There are too many things to try manually, start automating now!
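As a starting point for that automation, here is a minimal sketch that places one payload into every location of a request, one location at a time. The request layout (a dict with `path`, `query`, `headers`, `cookies` and `body` keys) is an assumption made for the example; adapt it to whatever your HTTP client uses.

```python
import copy
from typing import Dict, Iterator, Tuple

SECTIONS = ("query", "headers", "cookies", "body")


def inject_everywhere(request: Dict, payload: str) -> Iterator[Tuple[str, Dict]]:
    """Yield (location label, mutated request) for each injectable location."""
    for i in range(len(request["path"])):
        mutated = copy.deepcopy(request)
        mutated["path"][i] = payload
        yield f"path[{i}]", mutated
    for section in SECTIONS:
        for key in request[section]:
            mutated = copy.deepcopy(request)
            mutated[section][key] = payload
            yield f"{section}.{key}", mutated


request = {
    "path": ["api", "users", "42"],
    "query": {"fields": "name"},
    "headers": {"User-Agent": "demo", "X-Forwarded-For": "127.0.0.1"},
    "cookies": {"session": "abc"},
    "body": {},
}

for label, mutated in inject_everywhere(request, "../../etc/passwd"):
    print(label)
```

Each mutated request is a deep copy, so the original stays untouched and can be reused as the baseline for comparing responses.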

Wrapping up

My name is Ciaran McNally and I’ve been security testing applications for 8+ years. I’m currently working as an independent consultant and contractor. A close friend and I are planning on launching a pretty unique security scanning service startup this year called Scanomaly. I do penetration testing and application security reviews. I have a high success rate of uncovering critical and high rated security issues and usually suggest a bare minimum of 5 days for a pentest. Feel free to reach out for any of your security consulting needs via info@securit.ie.

Posted on October 23, 2020

Carving a path!

Operating successfully for 5 and a half years, Securit Consulting has just hit its 100th completed contract! Am I finally qualified to hang with the console cowboys in cyberspace?

I’d like to firstly thank all 26 of my clients, old and new, for their continued trust and support as my growth and progress would not have been possible without you. I would especially like to thank the 3 boutique security companies I’ve subcontracted with and for the work they’ve thrown my way over the years. I’m also incredibly grateful to the clients who have referred me to new customers. I look forward to a continued working partnership with you all and the many challenges we may face together in future. I feel this is a good milestone to share some general comments on the work already done and where I am.

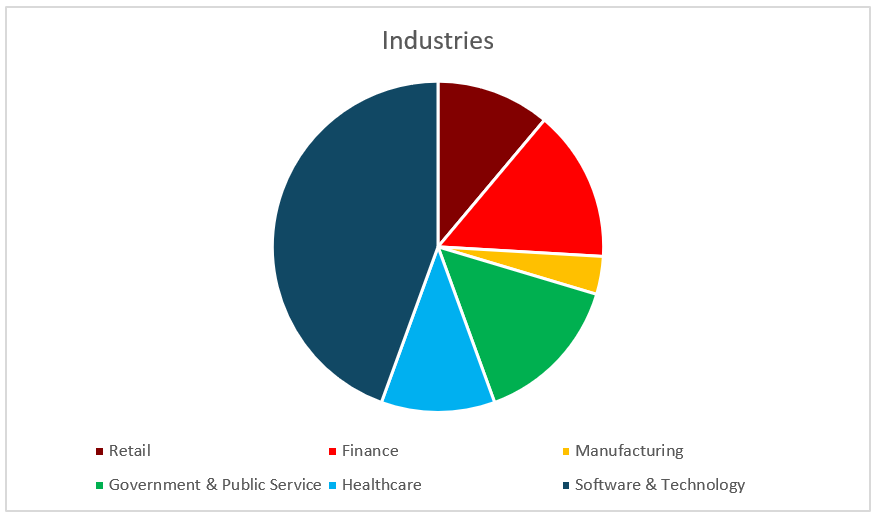

My clients range from well-known industry giants to startups and SMEs. They also operate in a large variety of different industries broadly categorized into the following areas:

This has exposed me to a wide variety of different security goals and priorities, varying from organizations focusing on the protection of research and intellectual property to a heavier focus on protecting customer PII and health data. Good business security practices are applicable across the board and can protect any organization working towards their unique objectives. A major focus for me is helping to get the basic practices and principles right first and foremost, before worrying about the more complex solutions available. When defences are best layered, you need solid foundations before you can build more extravagant structures on top.

Visibility

To me, this is the most critical component of any defence strategy. Visibility of your risks, visibility of your assets and visibility of the threats. Without it you will always be blindsided by the coming attack. The penalty for ignoring security can directly impact your revenue streams, with threats of large fines, ransomware and a loss of business through reputation damage or non-compliance. You can’t threat model and develop response strategies for the things you are not aware exist.

Security ignorance can impede your reaction time when responding to an active threat while also increasing the time before you even realise you’ve been attacked, increasing the impact of an otherwise preventable situation. Having specialist security expertise and having seen a large variety of real attacks hopefully puts me in a good position to help raise this critical awareness and improve your security visibility. All of your concerns can be combatted through good business practices, awareness, the remediation of known issues and the continuous validation of your security controls.

Types of contract

As far as the contracts go and in tackling ever-changing business security concerns, I provide repeatable services using battle-tested methodologies. I’ve broadly categorized these services and the work done into the following categories:

The product of these security deliverables is usually a report that contains technical remediation advice, a view of your assets through a clear security-focused lens, business strategy regarding how to focus or prioritise your efforts and guidance towards the business practices that can help add layers of protection to your defense.

Working freelance

The primary way I gain new leads is via referral. Only 7 of my contracts in total were acquired via social media (4 via LinkedIn, 3 via twitter). This is likely due to my own lack of skill in promotion or a well structured marketing strategy. I’m also just a small fish in a big pond. I decided to kick twitter and facebook back in June as I spent too much time on them with little return. It has also made me a million times more productive without having the stress of keeping up a positive and self-congratulatory web personality, not that I was ever a bundle of joy with widely agreeable perspectives on things…

Whether you are a company looking for a first security assessment or a company looking for their first REAL security assessment, I can deliver. I’ve seen enough poor reports and snake oil over my years to be confident that I produce high quality work with impactful advice for very fair prices. If you need consultation for any of your security concerns, feel free to reach out via info @ securit.ie.

Posted on February 4, 2019

The Lazy Hacker

It has been over a year since I last blogged, as reflected in this post’s name. My working situation has improved a lot over the past year, which has led to me neglecting the previously enjoyed exercise of bug bounty hunting and blogging. I do very much intend on getting back into these activities at some stage but I’m kept busy for now. I work roughly 7 months of the year on contracts, mainly taking the form of internal/external penetration tests and vulnerability assessments. I do still sometimes take on incident response jobs, many of which are subcontracted to me against my will 😉 I enjoy the rush of an active attack and witnessing the TTPs (techniques, tactics & procedures) of seasoned criminals but like you I can’t and won’t talk about any of that here because I’ll trigger my thousand yard stare.

So it’s a given that I have had 5 months of the year free to develop and encourage my own laziness via automation. That’s what this series of blog posts will focus on, hopefully in enough depth to make it a worthwhile read and potentially convince someone I’m worth contracting. I’d like to show the world some of the things I’ve been working on recently and share my mentality, toolkit and methodologies. I can guarantee a lot of this stuff is being done by others out there, as I like to read a lot and I didn’t pick up these ideas or skills from kicking rocks. It takes a lot of active learning and researching to stay on top of the latest security trends and techniques.

Many folks are indeed better than I at being cutting-edge, thanks to their active information circles, hard work and dedication to self-improvement. I still run tools like Nessus against infrastructure and am very fond of burp-suite. These tools complement and can be used in conjunction with any of my original tools. They capture useful information very quickly, especially on internal environments. Nessus authenticated scans are very handy to have and I won’t stop using such tools any time soon. I want to thank everyone in this wonderful security community who takes the time to openly share their trade-craft, hard lessons learned and research.

All I can share is what has worked for me consistently and maybe inspire some others with ideas or new avenues to explore. Since a lot of my stuff is self-developed, I may also eventually release more tools but the bulk of my toolkit probably won’t be shared. It’s my bread and butter and should help me find things others miss. Most things can also be easily accomplished with other tools already in the public domain. I develop for myself in python, which means I know my own tools inside out and can prioritize output in a way that the data becomes immediately actionable for me.

A Simplified Methodology

In order to avoid writing a book, I’ll try to simplify and explain a lot of ideas as generally as I can. The following is a simplified penetration testing methodology. This should be effective against any online business, network or company, of any scale.

- Reconnaissance, OSINT and Information Gathering

- Network scanning, Service and application identification, vulnerability identification

- Exploitation & Escalation

I set out with a plan of automating as much as I could, especially where current tools or techniques were lacking, so that at a minimum I was speeding up what I do manually. This should allow me to have more time for manual examination and fuzzing, which increases my value by increasing the likelihood that more serious issues can be exposed in the time available.

Getting Started

⦁ who is the target? company? acquisitions? owners? developers?

⦁ where/how do they hire? where are they based? what tech do they use?

⦁ what’s their major product? what services do they provide?

⦁ 3rd parties they interact with? How do they accomplish their goals?

⦁ where are their networks? how are they managed? by who?

⦁ what issues have impacted them? what attacks have they seen before?

⦁ Company presentations? product demos? any URLs/tech used in them?

⦁ what do they want or need to avoid?

With an understanding and profile of the target, it’s time to start enumerating public network information resources. This is the first technical step of my workflow and will be the focus of this post.

Some key concepts I relish throughout, are that all data and technical information captured is useful, can be expanded on, should be stored and is continuously fed in a feedback loop manner from process to process. It is also essential to keep track of when, where and what you are testing. This can help if a client wants to dig into anything further. Keep a log of your own activities and enable logs for tools, it’ll save you heartache in the long run and allow you to retrace steps in future.
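Keeping that activity log does not need anything fancy. A minimal sketch, assuming one JSON object per line in an append-only file (the file name and field names are my own choices for the example):

```python
import json
import os
import tempfile
from datetime import datetime, timezone
from typing import Dict, List


def log_activity(path: str, tool: str, target: str, note: str = "") -> Dict[str, str]:
    """Append one timestamped record of what was run against what."""
    entry = {
        "time": datetime.now(timezone.utc).isoformat(),
        "tool": tool,
        "target": target,
        "note": note,
    }
    with open(path, "a", encoding="utf-8") as fh:
        fh.write(json.dumps(entry) + "\n")
    return entry


def read_log(path: str) -> List[Dict[str, str]]:
    """Load every record back, oldest first, to retrace your steps."""
    with open(path, encoding="utf-8") as fh:
        return [json.loads(line) for line in fh if line.strip()]


log_path = os.path.join(tempfile.gettempdir(), "pentest-activity.jsonl")
log_activity(log_path, "portscan", "203.0.113.0/24", "top 1000 TCP ports")
print(read_log(log_path)[-1])
```

Because each line is self-contained JSON, the log is easy to grep during an engagement and easy to load into something else afterwards if a client asks when a particular host was touched.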

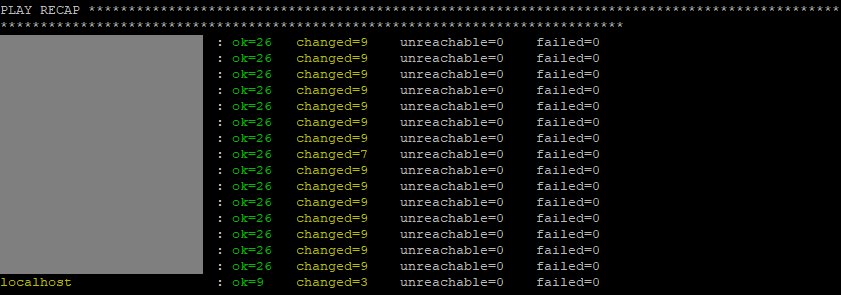

The final concept is that I want everything I build to be easy to tear down and rebuild. I accomplish this through a series of Ansible playbooks. This allows me to rapidly scale my tools out when needed and reproduce my architecture quickly on a fresh set of hosts. Ansible is perfect for me as an alternative to bash scripts and I can manage all of my infrastructure on the command line of a single administrative host.

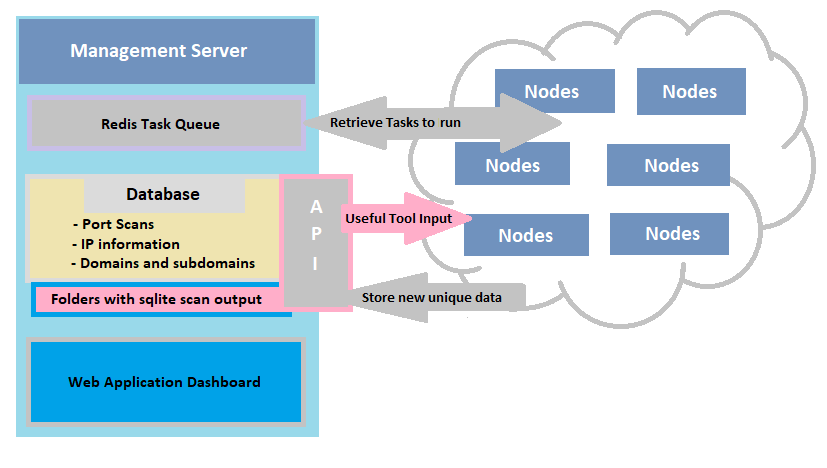

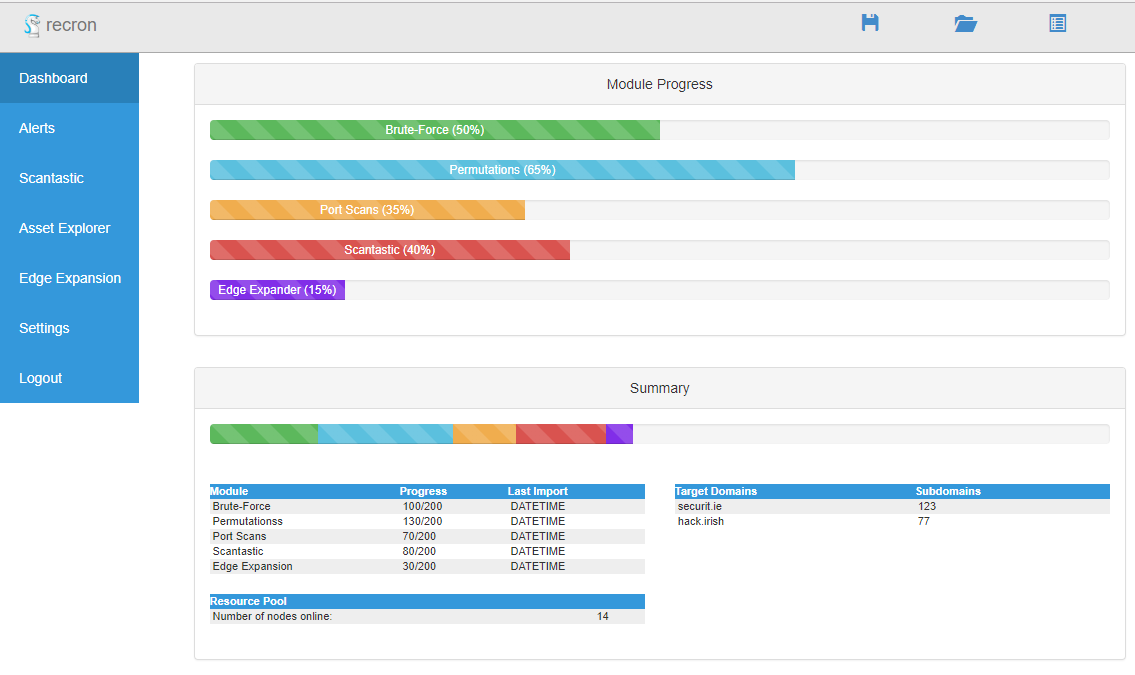

Capturing the Network

Before digging into the specifics I’d like to share the architecture behind one of my primary tools used for reconnaissance. This is a tool I developed called “recron”, an automated continuous recon framework. It is composed of four main parts on the management side: a database, a redis task queue, an API and a web app/dashboard. A single orchestration client then runs on any number of worker nodes to run my CLI tools. It scales quite well, I can leave it running over a week with no hiccups and I have no idea what to do with this beautiful beast yet.

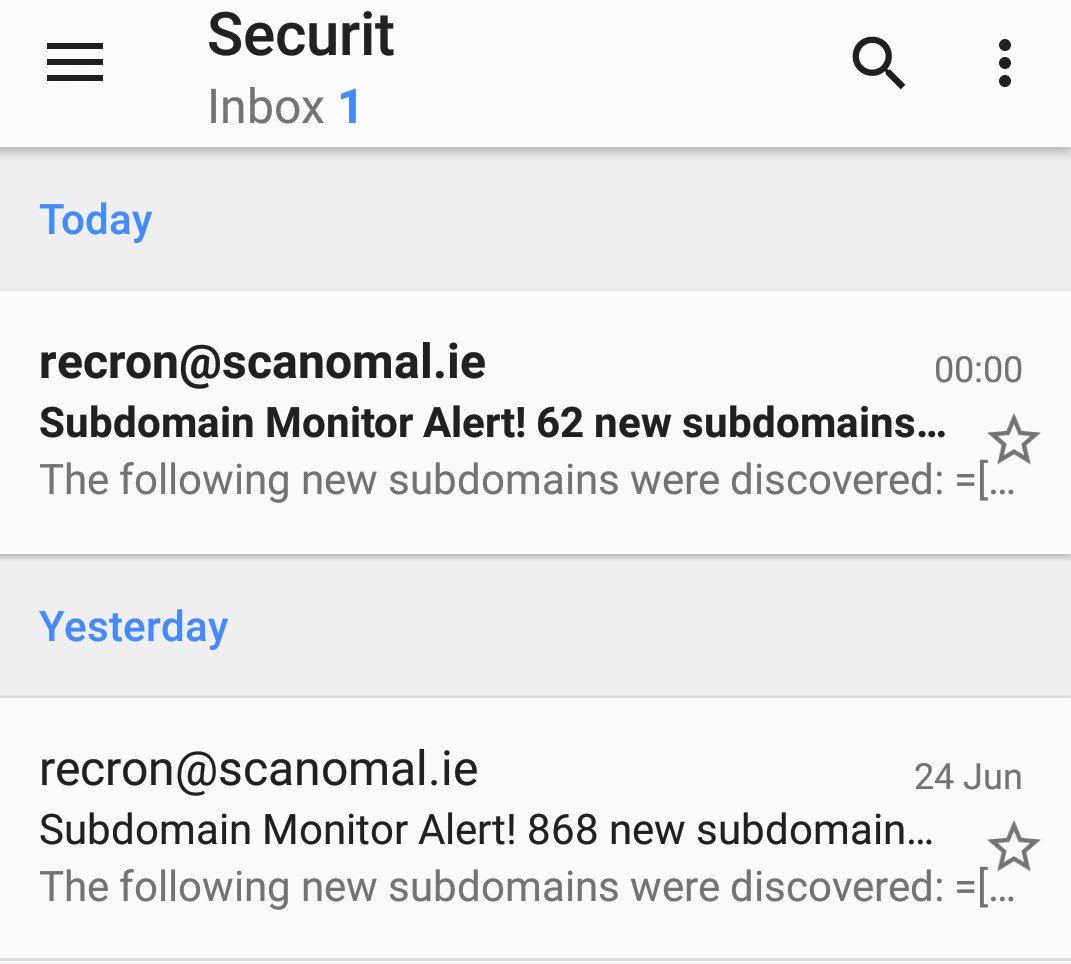

The basic principle here is that no information is lost once it’s collected. Through cronjobs and continuous enumeration, it can be deployed against a target for the duration of a penetration test, or as an ongoing monitoring service that manages assets from an offensive perspective. The tool allows data to be manually added and updated, while changes in infrastructure are picked up automatically, stored and tracked over time. It’s possible to implement automatic alerting for these changes too. However, I mostly just want a clear snapshot of the external network infrastructure as it is.

The continuous monitoring and alerting feature set I use and strive for are the following:

⦁ Subdomain discovery, brute forcing and enumeration

⦁ Network edge-expansion, identifying unexplored IP ranges and domains

⦁ Port scans, banner capturing and enumeration

⦁ Web application enumeration via brute forcing and discovery scans

⦁ Service and application identification

As an example, a domain is added to the database via a CLI tool and a continuous process adds this new domain to the task queue. The task is picked up by the worker nodes and the domain is fed into multiple domain discovery tools like subfinder (https://github.com/subfinder/subfinder), while IP information is retrieved from various current and historical APIs and data-sets like those at scans.io (https://scans.io/). The unique output of these tools is then added back into the database via the API and the process is repeated.
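The loop described above can be sketched in a few lines. This is only an illustration and not recron's actual code: an in-memory deque stands in for the Redis task queue, a set stands in for the database, and `discover` is a hypothetical placeholder for whatever discovery tools a worker would run.

```python
from collections import deque
from typing import Callable, Iterable, Set


def run_feedback_loop(
    seeds: Iterable[str],
    discover: Callable[[str], Iterable[str]],
    max_tasks: int = 1000,
) -> Set[str]:
    """Feed every newly discovered domain back into the queue until nothing new appears.

    `discover` is a hypothetical stand-in for the discovery tooling run by a worker.
    `max_tasks` is an assumed safety limit so the loop always terminates.
    """
    seeds = list(seeds)
    seen = set(seeds)      # stands in for the database of known domains
    queue = deque(seeds)   # stands in for the task queue
    processed = 0
    while queue and processed < max_tasks:
        domain = queue.popleft()
        processed += 1
        for found in discover(domain):
            # Only unique output goes back into the "database" and the queue.
            if found not in seen:
                seen.add(found)
                queue.append(found)
    return seen


if __name__ == "__main__":
    # Fake discovery results, purely for demonstration.
    fake_results = {
        "example.com": ["a.example.com", "b.example.com"],
        "a.example.com": ["dev.a.example.com", "example.com"],
    }
    print(sorted(run_feedback_loop(["example.com"], lambda d: fake_results.get(d, []))))
```

The deduplication step is what keeps the loop from running forever: a domain is only queued the first time it is seen.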

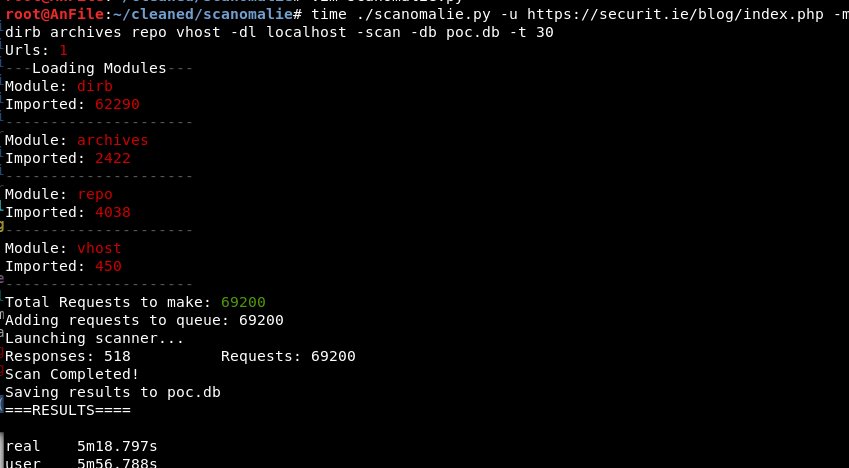

This continuous feedback loop has endless possibilities and allows for the smarter discovery of otherwise hard-to-find assets. For example, one of the tasks uses an excellent tool called altDNS (https://github.com/infosec-au/altdns), which generates alterations and permutations of subdomains. We can feed this tool a single domain/subdomain, a dictionary, all the subdomains discovered thus far for the target via our API and any other common subdomain brute force lists deemed useful. A brute force of this generated list with massdns (https://github.com/blechschmidt/massdns) doesn’t take longer than 10 minutes. Increasing the number of client nodes ensures the rapid discovery and enumeration of new assets, subdomains or potential virtual hosts.

Another thing we can do is brute force the subdomains of subdomains, whereby example.blah.test.domain.com can be brute forced at the following locations:

⦁ *.example.blah.test.domain.com

⦁ *.blah.test.domain.com

⦁ *.test.domain.com

⦁ *.domain.com
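As a rough sketch, the list of locations above can be generated by stripping one label at a time from the hostname. The function name and the requirement to pass the base domain explicitly are my own assumptions for illustration; working out the registrable domain automatically would need a public suffix list.

```python
from typing import List


def brute_force_levels(hostname: str, base_domain: str) -> List[str]:
    """Return a wildcard pattern for every level from hostname down to base_domain.

    Hypothetical helper: base_domain must be supplied by the caller.
    """
    hostname = hostname.rstrip(".").lower()
    base_domain = base_domain.rstrip(".").lower()
    if hostname != base_domain and not hostname.endswith("." + base_domain):
        raise ValueError(f"{hostname} is not under {base_domain}")

    levels = []
    current = hostname
    while True:
        levels.append("*." + current)
        if current == base_domain:
            break
        # Drop the left-most label and move one level up.
        current = current.split(".", 1)[1]
    return levels


if __name__ == "__main__":
    for pattern in brute_force_levels("example.blah.test.domain.com", "domain.com"):
        print(pattern)
```

Each pattern would then be expanded with a wordlist and resolved in bulk.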

For large companies it should be obvious how this increased attack surface is especially useful for bug bounty hunting. The results of this technique speak for themselves.

The screenshot above shows new subdomains discovered on top of what was found with traditional tooling. Another module of recron handles network edge expansion. It takes all the IP addresses currently in the database and identifies the /24 blocks that already contain multiple identified subdomains. It then does a reverse lookup of every IP in each of those networks and checks SSL certificates for any additional domains, flagging newly discovered domains as potentially owned by the same target organisation. This is less useful with modern cloud-based services but works well for company-owned network blocks, and it can reveal new areas or domains to explore or feed new data into the discovery process.
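The first step of edge expansion, picking out the /24 blocks worth exploring, might look something like the sketch below. It assumes IPv4 only and a simple mapping of subdomain to resolved IP; the function name and the `min_hosts` threshold are hypothetical, and the reverse lookups and certificate checks that follow are left out.

```python
import ipaddress
from collections import defaultdict
from typing import Dict, List


def candidate_ranges(resolved: Dict[str, str], min_hosts: int = 2) -> Dict[str, List[str]]:
    """Group subdomains by their /24 block and keep blocks with several known hosts.

    `resolved` maps subdomain -> IPv4 address (an assumed input shape).
    """
    by_block = defaultdict(set)
    for subdomain, ip in resolved.items():
        # strict=False lets us derive the enclosing /24 from a host address.
        block = ipaddress.ip_network(f"{ip}/24", strict=False)
        by_block[block].add(subdomain)
    return {
        str(block): sorted(names)
        for block, names in by_block.items()
        if len(names) >= min_hosts
    }


if __name__ == "__main__":
    known = {
        "a.example.com": "192.0.2.10",
        "b.example.com": "192.0.2.77",
        "c.example.com": "198.51.100.5",
    }
    print(candidate_ranges(known))
```

Every address in a returned block would then be queued for a reverse lookup and a certificate check.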

Reusing all the information stored presents me with other interesting opportunities. A more recent example I’ve explored is taking a list of IP addresses that were resolved from the target company’s domains. A task then runs a vhost brute force against these IPs using a list of all subdomains discovered thus far. This can reveal old or alternative web apps that were never removed from the company-owned IPs in question and open up additional attack surface. On multiple occasions this has revealed web applications for subdomains that didn’t have current DNS entries.

Another extremely useful module runs network scans against the identified IPs. This is also a continuous or daily process that takes an IP from the database and adds it to the task queue. I usually run masscan (https://github.com/robertdavidgraham/masscan) and then Nmap against a target with the -A flag so it also runs additional information gathering scripts. The results are then imported via the API and attached to a host, allowing for asset exploration via the management dashboard.

The idea is to make useful information accessible as quickly as possible so that it is actionable. Another component of this automated recon framework is a tool I just recently “finished”. I based it on a few older tools of mine (called scantastic) that dumped directory brute force scans into Elasticsearch. I wanted to minimize the overhead of my infrastructure, however, and SQLite works perfectly fine in this case.

I’m releasing the latest tool that was part of the framework, and it’s called scanomaly. The intent was to combine multiple tools into one flexible tool and store all the data for a single target in a database. The module-based system also allows me to rapidly script prototypes for new attacks and run them across a network.

This allows me to compare databases and generate alerts based on new content that has appeared on web servers since the last scan and also carry out general fuzzing. There isn’t a public user interface for the output of this tool yet but I may just copy some of the stuff from the management flask app in the screenshot above. I want the visual elements to go along with the anomaly detection tools, based on the response information stored in the responses table. I’ll likely do another blog post on how to use this tool with examples once I’ve the user side of it finished.
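To give a feel for the comparison step, here is a minimal sketch of diffing two scan databases. It is not scanomaly's real schema: only the `responses` table name comes from the description above, while the `url` and `status` columns and both helper functions are assumptions made for illustration.

```python
import sqlite3
from typing import Iterable, List


def make_scan(urls: Iterable[str]) -> sqlite3.Connection:
    """Build an in-memory scan database with an assumed `responses` schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE responses (url TEXT, status INTEGER)")
    conn.executemany("INSERT INTO responses VALUES (?, ?)", [(u, 200) for u in urls])
    return conn


def new_urls(old_conn: sqlite3.Connection, new_conn: sqlite3.Connection) -> List[str]:
    """Return URLs present in the newer scan but missing from the older one."""

    def urls(conn: sqlite3.Connection) -> set:
        return {row[0] for row in conn.execute("SELECT url FROM responses")}

    return sorted(urls(new_conn) - urls(old_conn))


if __name__ == "__main__":
    last_week = make_scan(["/index.html", "/login"])
    today = make_scan(["/index.html", "/login", "/backup.zip"])
    for url in new_urls(last_week, today):
        print("new content:", url)
```

An alert could be raised for each URL returned, and the same idea extends to comparing status codes or response sizes between scans.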

This post is getting too long so I’m going to call it before talking about exploitation and escalation …

It’s good to be back among the blogging world

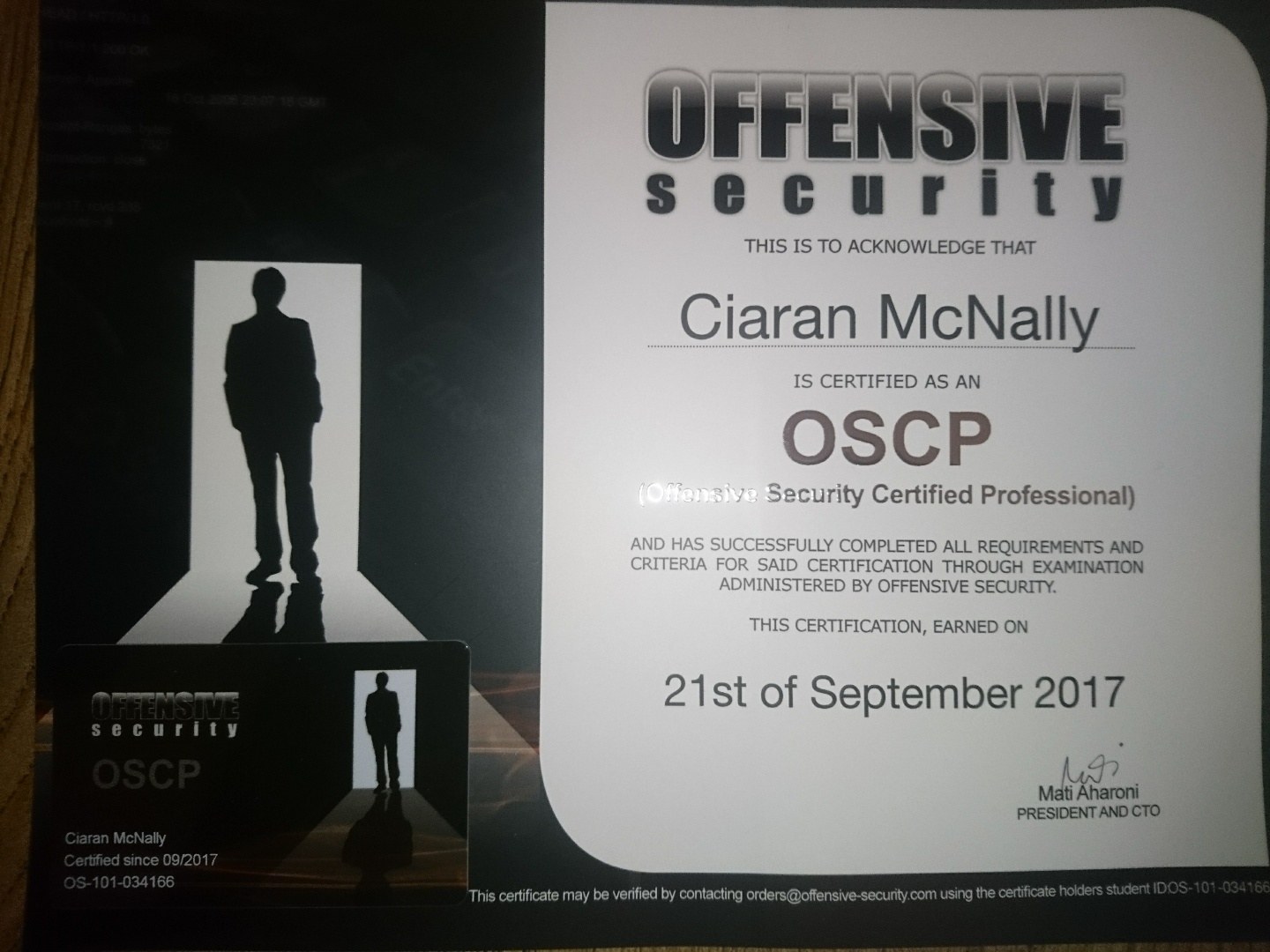

Posted on September 24, 2017

OSCP Certification

Having worked in information security for the past few years, I became well aware of the different certifications available as a means of professional development. The certification that seemed to gain the most respect from the security community was the Offensive Security Certified Professional (OSCP) certificate. I witnessed this time and time again in conversations online. The reason often given is that it is a tough 24 hour practical exam rather than a multiple choice questionnaire like many other security certificates. The OSCP is also listed regularly as a desirable requirement for many different kinds of infosec engineering jobs.

I recently received confirmation that I have successfully achieved this certification. To anyone interested in pursuing the OSCP, I would completely encourage it. There is no way you can come away from this experience without adding a few new tricks or tools to your security skills arsenal and aside from all of that, it’s also very fun. This certificate will demonstrate to clients or to any potential employer that you have a good wide understanding of penetration testing with a practical skill-set to back up the knowledge. I wanted to get this as I’ve had clients in the past not follow up on using my services due to me not having any official security certificates (especially CREST craving UK based customers). Hopefully this opens up some doors to new customers.

Before undertaking this course I already had a lot of experience performing vulnerability assessments and penetration tests. I also had a few CVEs under my belt and have been quite active in the wider information security community by creating tools, taking part in bug bounties and being a fan of responsible disclosure in general. I found the challenge presented by this exam to be quite humbling and very much a worthwhile engagement.

I would describe the hacking with Kali course materials and videos as very entry-level friendly, which is perfect for someone with a keen interest looking to learn the basics of penetration testing. The most valuable part of the course for those already familiar with the basics is the interactive lab environment. This is an amazing experience and it’s hard not to get excited thinking about it. There were moments of frustration and teeth-grinding but it was a very enjoyable way to sharpen skills and try out new techniques or tools.

I initially signed up for the course a full year ago while working full time on contracts, and found it extremely difficult to find the time to work on the labs as I had multiple ongoing projects and was doing bug bounties quite actively too. I burnt out fairly quickly and didn’t concentrate on it at all. I did one or two of the “known to be hard” machines in the labs fairly easily, which convinced me I was ready, and sat the exam having compromised fewer than 10 of the lab hosts. This was of course silly and I only managed 2 roots and one local access shell, which wasn’t nearly enough points to pass and very much dulled my arrogance at the time. I didn’t submit an exam report and decided to focus on my contracts and dedicate my time to the labs properly at a later date.

Fast forward over a year to the start of this month (September) and I had 2 weeks free that I couldn’t get contract work for. So I purchased a lab extension with the full intention of dedicating my time completely to obtaining this certificate. In the two weeks I got around 20 or so lab machines and set the date for my first real exam attempt. This went well but I didn’t quite make it over the line. I rooted 3 machines and fell short of privilege escalating on a 4th Windows host. I was so close and possibly could have passed if I had done the lab report and exercises. However, this time around I wasn’t upset by the failure and became more determined than ever to keep trying. I booked another 2 weeks in the labs, focused on machines with manual Windows privilege escalation and booked my next exam sitting, successfully nailing it.

As I had learned a lot of penetration testing skills doing bug bounties, I found that it was very easy to identify and gain remote access to the lab machines. I usually gained remote shell access within the first 20 or 30 minutes for the large majority of the attempted targets. I very quickly found out that my weakest area was local privilege escalation. During my contract engagements, clients regularly request that I don’t escalate any further with a remote code execution issue on a live production environment. This activity is also greatly discouraged in bug bounties, so I can very much see why I didn’t have much skill in this area. The OSCP lab environment taught me a large number of techniques and different ways of accomplishing this. I feel I have massively skilled up with regard to privilege escalation on Linux and Windows hosts.

I’m very happy to join the ranks of the (OSCP) Offensive Security Certified Professionals and would like to thank anyone who helped me on this journey by providing me with links to quality material produced by the finest of hackers. Keeping the hacker knowledge sharing mantra in mind, below is a categorized list of very useful resources I have used during my journey to achieving certification. I hope these help you to overcome many obstacles by trying harder!

Mixed

https://www.nop.cat/nmapscans/

https://github.com/1N3/PrivEsc

https://github.com/xapax/oscp/blob/master/linux-template.md

https://github.com/xapax/oscp/blob/master/windows-template.md

https://github.com/slyth11907/Cheatsheets

https://github.com/erik1o6/oscp/

https://backdoorshell.gitbooks.io/oscp-useful-links/content/

https://highon.coffee/blog/lord-of-the-root-walkthrough/

MsfVenom

https://www.offensive-security.com/metasploit-unleashed/msfvenom/

https://netsec.ws/?p=331

Shell Escape Techniques

https://netsec.ws/?p=337

https://pen-testing.sans.org/blog/2012/06/06/escaping-restricted-linux-shells

https://airnesstheman.blogspot.ca/2011/05/breaking-out-of-jail-restricted-shell.html

https://speakerdeck.com/knaps/escape-from-shellcatraz-breaking-out-of-restricted-unix-shells

Pivoting

http://www.fuzzysecurity.com/tutorials/13.html

http://exploit.co.il/networking/ssh-tunneling/

https://www.sans.org/reading-room/whitepapers/testing/tunneling-pivoting-web-application-penetration-testing-36117

https://highon.coffee/blog/ssh-meterpreter-pivoting-techniques/

https://www.offensive-security.com/metasploit-unleashed/portfwd/

Linux Privilege Escalation

https://0x90909090.blogspot.ie/2015/07/no-one-expect-command-execution.html

https://resources.infosecinstitute.com/privilege-escalation-linux-live-examples/#gref

https://blog.g0tmi1k.com/2011/08/basic-linux-privilege-escalation/

https://github.com/mzet-/linux-exploit-suggester

https://github.com/SecWiki/linux-kernel-exploits

https://highon.coffee/blog/linux-commands-cheat-sheet/

https://www.defensecode.com/public/DefenseCode_Unix_WildCards_Gone_Wild.txt

https://github.com/lucyoa/kernel-exploits

https://www.rebootuser.com/?p=1758

https://www.securitysift.com/download/linuxprivchecker.py

https://www.youtube.com/watch?v=1A7yJxh-fyc

https://www.youtube.com/watch?v=2NMB-pfCHT8

https://www.youtube.com/watch?v=dk2wsyFiosg

https://www.youtube.com/watch?v=MN3FH6Pyc_g

https://www.slideshare.net/nullthreat/fund-linux-priv-esc-wprotections

https://www.exploit-db.com/exploits/39166/

https://www.exploit-db.com/exploits/15274/

Windows Privilege Escalation

https://blog.cobaltstrike.com/2014/03/20/user-account-control-what-penetration-testers-should-know/

https://github.com/foxglovesec/RottenPotato

https://github.com/GDSSecurity/Windows-Exploit-Suggester/blob/master/windows-exploit-suggester.py

https://github.com/pentestmonkey/windows-privesc-check

https://github.com/PowerShellMafia/PowerSploit

https://github.com/rmusser01/Infosec_Reference/blob/master/Draft/ATT%26CK-Stuff/Windows/Windows_Privilege_Escalation.md

https://github.com/SecWiki/windows-kernel-exploits

https://hackmag.com/security/elevating-privileges-to-administrative-and-further/

https://pentest.blog/windows-privilege-escalation-methods-for-pentesters/

https://toshellandback.com/2015/11/24/ms-priv-esc/

https://www.gracefulsecurity.com/privesc-unquoted-service-path/

https://www.commonexploits.com/unquoted-service-paths/

https://www.exploit-db.com/dll-hijacking-vulnerable-applications/

https://www.youtube.com/watch?v=kMG8IsCohHA&feature=youtu.be

https://www.youtube.com/watch?v=PC_iMqiuIRQ

https://www.youtube.com/watch?v=vqfC4gU0SnY

https://www.exumbraops.com/penetration-testing-102-windows-privilege-escalation-cheatsheet/X

https://www.fuzzysecurity.com/tutorials/16.html

http://www.labofapenetrationtester.com/2015/09/bypassing-uac-with-powershell.html

Posted on June 29, 2016

Freelance Security Consulting!

I recently did an interview for a magazine about bug bounties and hacking Pornhub. When I’m not targeting large multi-million dollar organisations directly through bug bounty programs, I perform security assessments on behalf of small to medium size enterprises.

Contracts take up a larger amount of my time. As a freelance consultant it can be hard to advertise this work, given that I keep my clients and the work I do strictly confidential. I use responsible disclosure and bug bounty programs as a means of advertising my skill-set and hopefully as a means of getting attention for the regular consulting services I provide. I’m available for short term contracts, no shorter than a day and no longer than a month.

My rates are quite cheap in comparison with standard prices one usually expects to pay for security consulting or penetration testing. The reason for this is to make my services more attractive to smaller companies or websites (they need protection too and often can’t afford it).

I’m also still running a special cheap rate for customers based in Ireland. My slightly alternative Penetration Test is essentially a more old-school style, 2 week, multifaceted penetration test. This type of testing is harder to come by these days.

The aim of this testing is to identify the security issues most likely to be exploited by a malicious attacker to ruin or damage your business, looking at your business as a whole. This includes taking a look at all your online assets and also includes performing controlled malware or phishing campaigns. The goal here is to determine what the most likely attacks facing your particular business model are and to help you resolve or mitigate them. It can also help identify issues your staff need to be aware of. Often organisations don’t realize how much information about their assets or internal company structure an attacker can glean from public resources. This testing gives a very good overview of where your primary security risks are and can help you to prioritize your efforts.

Alternative 2 week Penetration Test: €4000

Daily Consulting Rate: €650-850

My prices are generally cheaper for smaller businesses and if I don’t find severe issues.

Contact info@securit.ie with any questions, it costs nothing to be curious!

Posted on April 11, 2016

Zero Days CTF – Dublin 2016

I attended the second ever Zero Days CTF (capture the flag) event recently. It was set up and organised by lecturers at the Institute of Technology, Blanchardstown who run the Cyber Security and Digital Forensics course. The event was also sponsored by Amazon, Integrity360 and Rits Information Security. ITB is home to one of the few 3rd level security courses available in Ireland. The event was primarily aimed at students and is, as of now, the biggest event of its kind to be run in Ireland as far as I’m aware, with almost 40 teams of 4 taking part.

As someone who follows CTF TIME quite regularly, I’ve done quite a few challenges from the top level Capture the Flag events around the world. The competition is stiff and the challenges are difficult at the majority of these events. I often recommend that people check them out or follow the github of CTF write-ups as a means of learning some cool new shit.

It was however nice to see that the difficulty of the challenges in the Zero Days CTF was adjusted to make it fairer for participants of all levels. The challenges did however seem more difficult than those I had seen from the 2015 event, which is a positive thing for all involved. There were also a number of teams of professionals already working in the industry taking part. I would have loved to have events such as this back when I was a student. It serves to point newcomers in the security world towards some very interesting areas and certainly provides extra opportunities to put some of your information security knowledge, theory and techniques into practice.

Our winning team ‘popret’ was composed of Conor Quigley, Denis Isakov, Serge Bazanski and myself (Ciaran McNally). We are all currently working in the security industry in Dublin and are fond of a challenge. We put into action some good team and collaboration techniques that helped us knock off many of the challenges early before anyone else managed to solve them, ensuring we maximized our points. We used IRC and also a shared paste-pad to help speed up our solutions by documenting any work done so far and to make sure we weren’t simultaneously working on the same challenges.

We were awarded some 7″ Android Tablets for our effort! I’d like to thank all who put the work in to set up such a fun event and also encourage people to attend events like this into the future as it’s fundamental to growing the quite small Irish information security community. Events like this are excellent networking opportunities and are a good place to spot tech talent fresh out of college. Hopefully we see plenty more of these events into the future…

Hack the Planet.

Posted on October 9, 2015

Daggercon 2015

Daggercon is a security conference that took place for the first time this year out in west Dublin on the IBM campus. It was free to attend and was definitely one of the biggest events of its kind that I’ve seen in Ireland. The event was run very smoothly and with a real community spirit that hopefully helps grow communication within the Irish information security scene. All areas of the security community were represented, from hobbyist to corporation.

After the CTF I nervously did my talk but was delighted to get good feedback and questions from the attendees. The topic for my talk was bug bounties. Hopefully I helped to raise awareness of them or gave useful tips on how to get started with them. The slides for this talk are available at slideshare.net/securitie/bug-bounties-cn-scal.

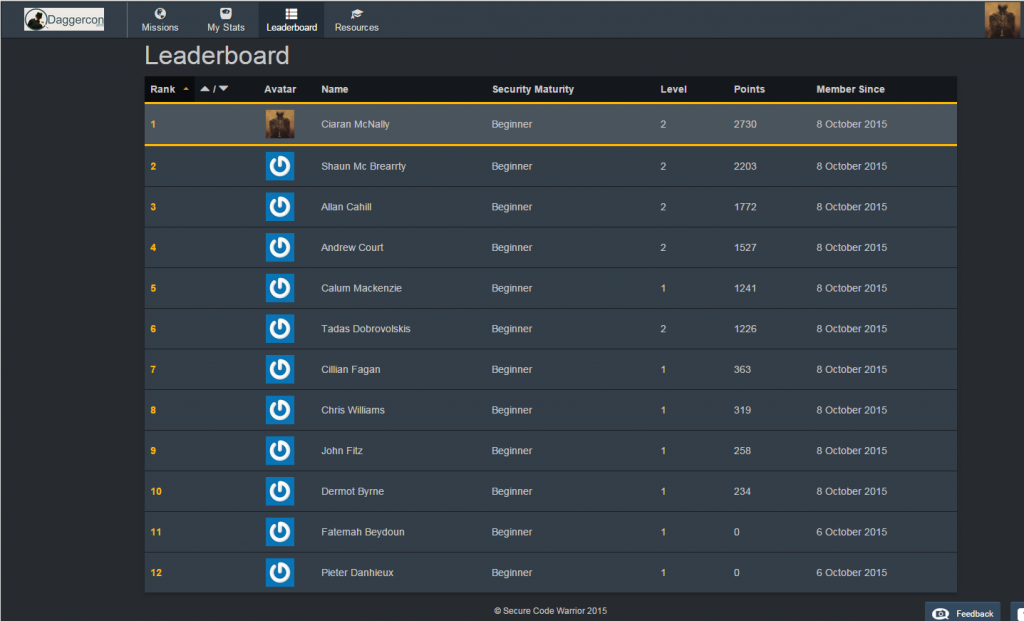

I then also took part in a “Secure Coding” competition that leveraged a very interesting platform by the name of Secure Code Warrior. This gamified the reviewing of source code and finding security issues in JSP web applications through static analysis. It was definitely more fun than you would expect for a learning platform.

I ended up winning this competition too and was presented with a new Amazon Echo. These devices aren’t available in Ireland at the moment. All in all it was an excellent event and I hope to see it continued in 2016!

Posted on September 18, 2015

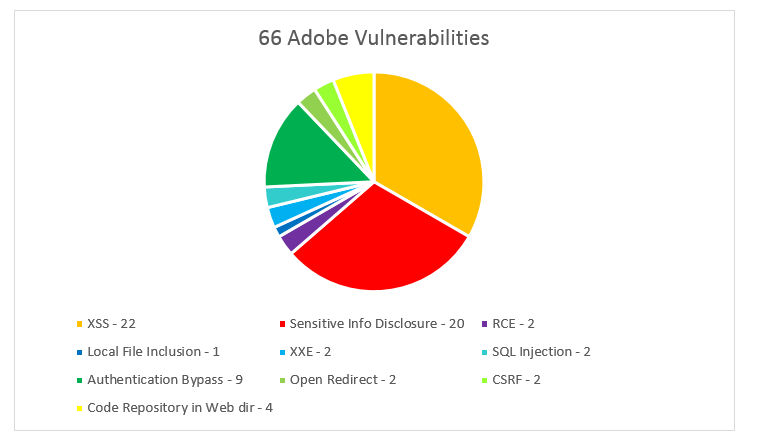

Hacking Adobe for free

Over the past few months I’ve dedicated approximately 24 hours in total to the Adobe Responsible Disclosure program. I am currently the leader of this bounty program by a significant margin. This is however mostly attributable to the fact that the program offers no cash incentive for bounty hunters. I was informed that they do however run private bounty programs on occasion for cash rewards. I set myself a personal goal of submitting 100 bugs and then planned to do a public disclosure of all the issues I discovered. This was primarily meant as a means of advertising the security consulting services I provide as a freelancer.

This plan however went a bit sour, as Adobe requested I keep the details of the issues I responsibly disclosed private, which I feel they are fully entitled to do. What I have decided to do instead is write this blog post, which outlines at a high level the kind of issues I’ve been finding on their web services. This data is based on the first 66 vulnerabilities I submitted.

As you can see in the pie chart below, 33% of all the bugs I submitted were Cross Site Scripting (XSS) vulnerabilities. It’s easy to understand that XSS is still very widespread and will remain a common web security problem in the OWASP top 10 for a long time.

Another 30% of the issues I found were “Sensitive Information Disclosure”. These varied widely and included such things as web logs in the web directory, configuration files, misconfigurations that allowed source code to be downloaded and even a public-private key pair in a web directory.

Some of the most severe issues I identified were authentication bypasses or privilege escalation bugs. These allowed administrative access to various content management systems belonging to some of Adobe’s key services. A lot of these were achieved by accessing administrative panels directly that had broken authentication, through finding hidden registration forms or simply misconfigured permissions. These accounted for 14% of the findings.

I discovered quite a lot of other critical issues that could or do allow the leaking of a lot of sensitive data. Remote Code Execution, SQL Injection, Local File Inclusion and XXE are the kind of vulnerabilities that would generally reward handsomely on a paid bounty program, as issues like this could cost a company millions if the information fell into the wrong hands. I also found multiple code repositories available in web directories. These critical issues accounted for 15% of the 66 bugs I reported.

There are definitely some interesting contrasts to be drawn between the security of some of the paid bounty programs and that of Adobe. There seem to be a lot more obvious, “low-hanging” issues throughout the Adobe web services. This could mean Adobe presents a lower threshold of difficulty and so is a perfect target for new bounty hunters.

This gives me hope, as it’s becoming clearer that the bug bounty community knows the value inherent in what we do. Companies should be forced to realise that even a small cash incentive can go a long way in convincing the community to look a little deeper at their bug bounty program. Even at $20 a vulnerability, the 66 issues I submitted would pay my month’s rent. Regardless of which way you look at it, it’s a billion dollar organisation being cheap. Once I hit my 100 bug goal I won’t be looking at their stuff any further without a cash incentive.

I am undercutting the bounty community’s “No Free Bugs” motto simply as a means of trying to get myself a bit more contract work and for that I am sorry. I see bug bounties as something the world needs right now. They provide a great means for young infosec students to break into the industry and get a few notches of experience on their CV, all while earning a bit of pocket money for the effort.

Many people like myself are seeing bounties as a means of making consistent income so they can work for themselves or even fill in the spaces between contract work as a freelancer. Bug bounties present an excellent opportunity for beginners to practice their practical skills on real systems. This is much more valuable as “industry experience” than the Capture the Flag events you may get in universities, for example, because you are getting real experience in reporting the issues found.

Bug bounties are extremely valuable to the companies leveraging them too. They give a defensive security team an incentive to be proactive about defending the company’s systems. I imagine they have revolutionised the way internal incident handling is performed, or at least greatly reduced the turn-around time for resolving security issues as the programs become more mature.

Posted on September 8, 2015

An Alternative Penetration Test – The “Mr.Robot Special”

One thing I abhor that you will find as standard in the security industry is the two to three day Penetration Test. These undertakings can of course greatly help improve an organisation’s security posture but they seem more like a box-ticking activity. The only reasonable outcome is that the bar is raised just enough so that a passing script-kiddie loses interest and moves on, or that the most obvious severe issues are remediated. There are of course other factors at play such as costs, deadlines and compliance testing, but the problem remains.

Companies need to embrace that a security assessment is something they should come away from with fear. The result of your penetration test should be a solid list of real attack scenarios your company could face (or will) that needs to be defended against. If your organisation doesn’t feel threatened by the results or feel like they have been outsmarted, then the security assessment isn’t a real reflection of the real world. The price you are paying isn’t worth the resulting report.

In the real world, malicious actors will use any means necessary to benefit from the shortcomings of your enterprise’s security. This could be for financial gain, through stealing information or simply through complete destruction of your assets because they disagree ideologically with what you do.

I found the recent series of Mr.Robot to be fantastic. It accurately portrayed many of the multifaceted methods deployed by malicious actors to infiltrate and destroy even an extremely large enterprise. Understandably this is fiction and the outcome is quite far-fetched, but the techniques demonstrated are not. Attacks similar in nature are regularly used against organisations and the regular Penetration Testing methodology of reconnaissance, analysis, vulnerability assessment and execution is demonstrated in full.

In light of this fantastic show and having the freedom to try new things as a freelancer, I would like to announce my “Mr.Robot Special”. This is a full, multifaceted, two week Penetration Test for the price you would regularly pay for a traditional three day one. I get excited at any opportunity to work like a secret agent and play with all my gadgets and custom tools. This offer is only open to organisations in Ireland for the moment. Please do get in touch! ( info@securit.ie )

As my perspective has changed on the value of traditional penetration tests, I would like to also challenge the standard. A single person of course does not possess the same skills you may find in a good red-team style penetration test. Very rarely, if ever, do you get that style of attack in a standard operation. I feel there is inherent value in having a single actor perform a concentrated attack on your organisation or network, as the drive to succeed is increased as there is no illusion of a team to hide failure.

If I can highlight to you the damage a single person could potentially do to your organisation, it should be easier to imagine the risk posed by an internet full of malicious actors, let alone a nation-state level attack or other advanced persistent threat. With a clear view of the threat you face on a daily basis, cost-effective strategies can be developed to help mitigate these risks.